Objectives of the service

Advances in generative AI are making manipulated satellite imagery a serious problem. Newsrooms, analysts, and security teams risk relying on altered images without knowing it.

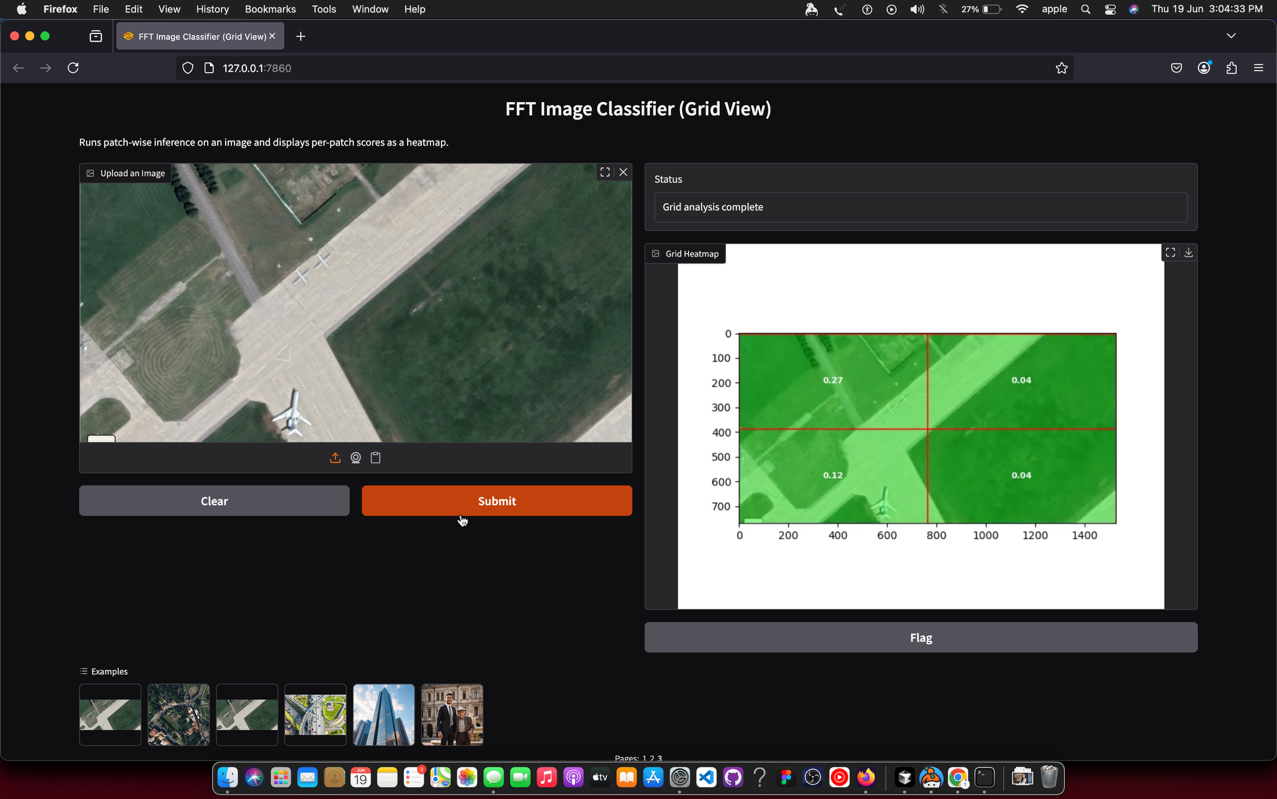

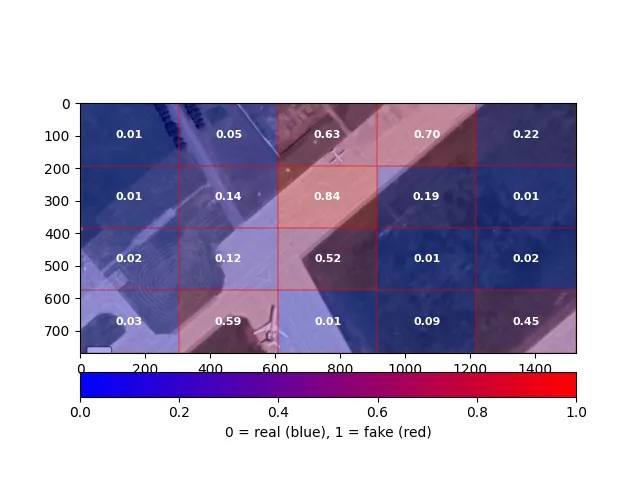

Verisat is a tool that detects whether a satellite image has been generated by AI or otherwise manipulated. Users upload an image and receive a confidence score and a visual overlay showing suspicious areas.

The system is powered by machine learning models trained on real and AI-generated satellite imagery. It can be used through a simple web interface or integrated into other systems via an API.

Verisat helps users verify imagery quickly and confidently, supporting better decisions in journalism, intelligence, and crisis response.

Users and their needs

Verisat targets three main user groups:

- Journalists and media organisations need to verify satellite images used in reporting, especially during fast-moving events like conflicts or disasters.
- Defence and intelligence analysts require tools to detect subtle image manipulation that may affect operations or policy decisions.
- Imagery providers and fact-checkers must ensure content has not been altered or generated before distribution.

These users face growing challenges as AI-generated imagery becomes more convincing and harder to detect. They need fast, reliable tools that are easy to use and can explain why an image is suspicious.

Verisat helps meet this need by offering a detection system that can be used via a simple interface or integrated into existing workflows.

Service/system concept

Verisat provides users with a simple way to check whether a satellite image has been manipulated or generated using AI. The user uploads an image through a web interface or an API, and the system returns a confidence score and a heatmap highlighting any suspicious areas.
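The response format is not specified here. As a minimal sketch, assuming the API returns a confidence value plus a per-pixel heatmap of suspicion scores in [0, 1], a client could group above-threshold pixels into bounding boxes to highlight suspicious areas. `DetectionResult`, `suspicious_regions`, the 0.5 threshold and the example values are all hypothetical, not part of a documented Verisat API.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class DetectionResult:
    """Hypothetical response shape (assumption, not a documented Verisat API)."""
    confidence: float           # probability that the image is manipulated or generated
    heatmap: list[list[float]]  # per-pixel suspicion scores in [0, 1]


def suspicious_regions(heatmap, threshold=0.5):
    """Group above-threshold pixels into 4-connected regions and return one
    bounding box per region as (row_min, col_min, row_max, col_max), inclusive."""
    rows = len(heatmap)
    cols = len(heatmap[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or heatmap[r][c] < threshold:
                continue
            # Breadth-first flood fill from this seed pixel.
            seen[r][c] = True
            queue = deque([(r, c)])
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and heatmap[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes


# Illustrative values only.
result = DetectionResult(
    confidence=0.91,
    heatmap=[
        [0.1, 0.8, 0.9, 0.0],
        [0.0, 0.7, 0.1, 0.0],
        [0.0, 0.0, 0.0, 0.6],
    ],
)
print(suspicious_regions(result.heatmap))  # [(0, 1, 1, 2), (2, 3, 2, 3)]
```

4-connectivity keeps diagonally touching pixels in separate regions; a real client might prefer 8-connectivity or smooth the heatmap before thresholding.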

The detection model is trained on both real satellite imagery and synthetic images created by generative AI tools. It looks for subtle patterns and statistical artefacts left behind by image generation or editing.
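The features Verisat's model actually learns are not described here. One widely used hand-crafted cue in image forensics, shown purely as an illustration, is the high-pass noise residual: filtering out smooth image content with a Laplacian kernel leaves fine-grained texture whose statistics can differ between sensor-captured and generated pixels. The function names are hypothetical, and images are assumed to be single-band 2D lists.

```python
import statistics


def laplacian_residual(img):
    """Apply the 4-neighbour Laplacian kernel [[0,-1,0],[-1,4,-1],[0,-1,0]]
    to every interior pixel of a single-band image (a list of rows).

    Flat areas and linear gradients map to zero, so what remains is the
    fine-grained texture often used as a forensic cue.
    """
    if len(img) < 3 or len(img[0]) < 3:
        raise ValueError("image must be at least 3x3 pixels")
    return [
        [
            4 * img[y][x] - img[y - 1][x] - img[y + 1][x] - img[y][x - 1] - img[y][x + 1]
            for x in range(1, len(img[0]) - 1)
        ]
        for y in range(1, len(img) - 1)
    ]


def residual_stats(img):
    """Summarise the residual with two simple statistics."""
    values = [v for row in laplacian_residual(img) for v in row]
    return {
        "mean_abs": statistics.fmean(abs(v) for v in values),
        "variance": statistics.pvariance(values),
    }


# Toy 5x5 inputs: a smooth ramp versus a pixel-level checkerboard.
ramp = [[x + y for x in range(5)] for y in range(5)]
checker = [[(x + y) % 2 for x in range(5)] for y in range(5)]
print(residual_stats(ramp)["mean_abs"])     # 0.0
print(residual_stats(checker)["mean_abs"])  # 4.0
```

A single statistic like this is easy to fool on its own; in practice it would be one input among many to a learned detector.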

The service includes a backend inference engine, a threat-monitoring module that tracks new generative models, and a lightweight frontend for user interaction. It is designed to be used directly or embedded in secure environments like media verification platforms or intelligence systems.
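A minimal sketch of how the backend components might be kept separate, assuming the inference engine wraps any callable that returns a manipulation probability and the threat-monitoring module keeps a set of generator families the detector has been trained against. All class names, method names and family labels below are hypothetical; the frontend is represented only by the calling code.

```python
from dataclasses import dataclass, field
from typing import Callable

Image = list[list[float]]  # single-band image as rows of pixel values


@dataclass
class ThreatMonitor:
    """Hypothetical tracker of generative-model families.

    `covered` holds families represented in the training data; any family
    reported via `report` but not covered is a gap that may need retraining.
    """
    covered: set[str] = field(default_factory=set)
    observed: set[str] = field(default_factory=set)

    def report(self, family: str) -> None:
        self.observed.add(family)

    def coverage_gaps(self) -> set[str]:
        return self.observed - self.covered


@dataclass
class InferenceEngine:
    """Hypothetical backend wrapper around a trained detector."""
    detector: Callable[[Image], float]  # returns P(image is manipulated)
    threshold: float = 0.5

    def analyse(self, image: Image) -> dict:
        score = self.detector(image)
        return {"confidence": score, "flagged": score >= self.threshold}


# Calling code standing in for the frontend; the detector is a dummy.
monitor = ThreatMonitor(covered={"stable-diffusion"})
monitor.report("stable-diffusion")
monitor.report("new-model-x")  # hypothetical newly released generator
engine = InferenceEngine(detector=lambda img: 0.8)
print(engine.analyse([[0.0]]))  # {'confidence': 0.8, 'flagged': True}
print(monitor.coverage_gaps())  # {'new-model-x'}
```

Keeping the monitor apart from the engine lets a newly reported generator prompt retraining without touching the inference path that users call.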

Space Added Value

Verisat is built on satellite imagery, making Earth Observation (EO) data central to both the problem and the solution. The rise of AI-generated satellite images creates new risks in how EO data is trusted and used. Verisat addresses this by using real EO imagery as ground truth to train and validate detection models.

ESA-provided satellite data provides high-quality, trusted inputs for both training and benchmarking. By comparing real EO data with synthetic imagery created using tools like Stable Diffusion, Verisat's models can learn the subtle statistical differences between authentic and fake content.
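The training procedure is not detailed here, and Verisat's actual models may differ substantially. As a stand-in, the sketch below fits a logistic-regression classifier to labelled feature vectors (0 = authentic EO image, 1 = synthetic) by batch gradient descent. `train_detector` is a hypothetical name, and the feature values in the usage example are made-up toy numbers rather than Verisat data.

```python
import math
import statistics


def _sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_detector(features, labels, lr=0.5, epochs=1000):
    """Fit logistic regression by batch gradient descent on the log loss.

    features: list of equal-length feature vectors, one per image.
    labels:   1 for synthetic/manipulated, 0 for authentic EO imagery.
    Returns a predict(x) function giving P(synthetic).
    """
    n, d = len(features), len(features[0])
    # Standardise each feature so gradient descent is well conditioned.
    means = [statistics.fmean(col) for col in zip(*features)]
    stds = [statistics.pstdev(col) or 1.0 for col in zip(*features)]

    def scale(x):
        return [(xi - m) / s for xi, m, s in zip(x, means, stds)]

    xs = [scale(x) for x in features]
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y_true in zip(xs, labels):
            # Gradient of the log loss w.r.t. the logit is (p - y).
            err = _sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y_true
            for j in range(d):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n

    def predict(x):
        return _sigmoid(sum(wi * xi for wi, xi in zip(w, scale(x))) + b)

    return predict


# Made-up single-feature toy data (e.g. one residual statistic per image).
X = [[0.20], [0.30], [0.25], [0.90], [1.00], [0.80]]
y = [0, 0, 0, 1, 1, 1]
predict = train_detector(X, y)
print(predict([0.95]) > 0.5, predict([0.22]) > 0.5)  # True False
```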

This space-data-first approach allows the system to be tuned for domain-specific challenges, such as cloud cover, resolution artefacts, and geographical variation. Generic image-detection tools often miss these factors.

Current Status

Verisat completed its ESA KickStart feasibility study in 2025. A prototype model was trained using ESA imagery and synthetic datasets, demonstrating over 95 percent accuracy in detecting AI-generated satellite images. A public demonstrator was launched at https://verisat.ai, allowing users to test the tool directly.

The team engaged with over 30 journalists and media professionals and received interest from a defence contractor exploring integration. The service architecture and pricing model have been defined, and Verisat is now seeking follow-on funding and pilot partners to scale into production.